Lexcode’s Hyperlation Team specializes in researching and using ATPE (Automated Translation and PostEditing). ATPE combines the efficiency and accuracy of artificial intelligence (AI) translation with the excellence of human editing, and it is Lexcode’s upgraded take on conventional machine translation postediting (MTPE). The team regularly replaces human translation of various documents with AI translation engines to understand the strengths and weaknesses of these evolving tools. Lexcode also puts the efficiency and potential of AI and machine translation to use in its work. Today, I would like to share a comparative review of three of the world’s biggest translation engines, a typical test bed for AI translation.

Test Basics

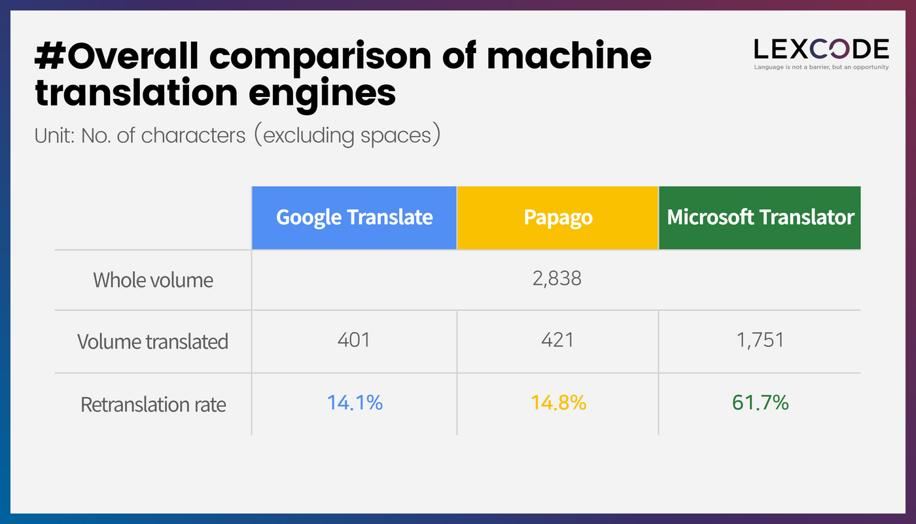

First, a test was conducted to compare the quality of Google Translate, Papago, and Microsoft Translator. The test document was a manual, translated from Korean into English. Note that only an excerpt of the document is shown in this article; the full text used for the test is confidential, so disclosure is limited.

Each engine was evaluated for accuracy and style, based on how much postediting effort its output required. Three points were considered when deciding whether a translation had to be redone from scratch:

- if important or technical terms were mistranslated;

- if the sentence’s main content changed significantly; and

- if the overall sentence structure was awkward and difficult to understand.

Cases that could be easily corrected through postediting, such as a slightly awkward tone, a single mistranslated minor word, or a message that was not fully conveyed, were not counted.

Analysis Results

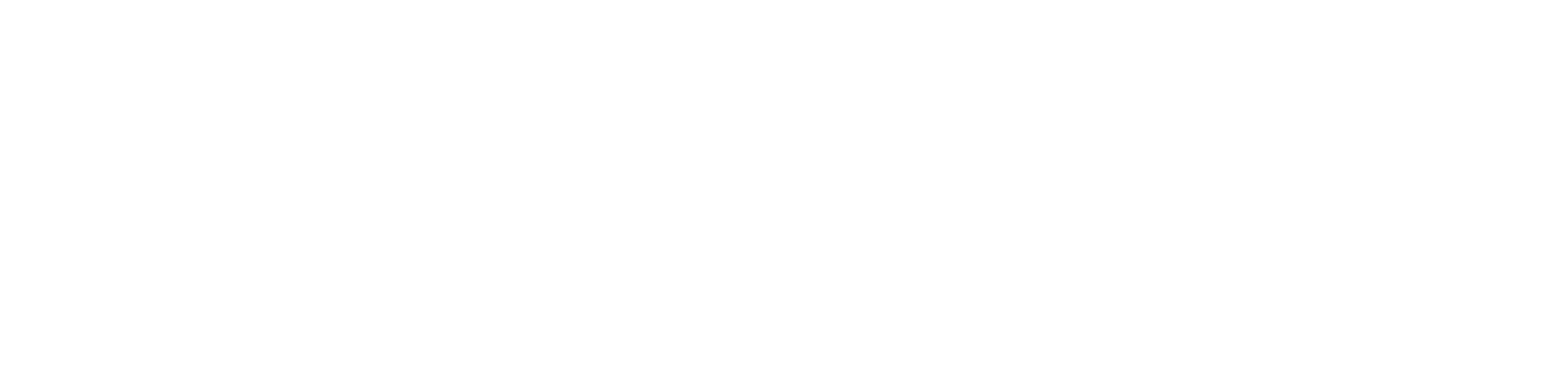

Google Translate

Google Translate produced sentences of satisfactory quality, helped by the simplicity of the content. Its results were relatively strong compared with those of the other engines, possibly owing to the sheer amount of data Google has accumulated. However, while individual sentences were of decent quality, coherence across paragraphs and across the document as a whole was sometimes lacking. A full review was required to unify terminology, sentence endings, and tone. The manual’s terminology was translated more accurately than expected, but not perfectly, so it also needed review. In particular, homonyms had to be checked and rendered with the correct terms.

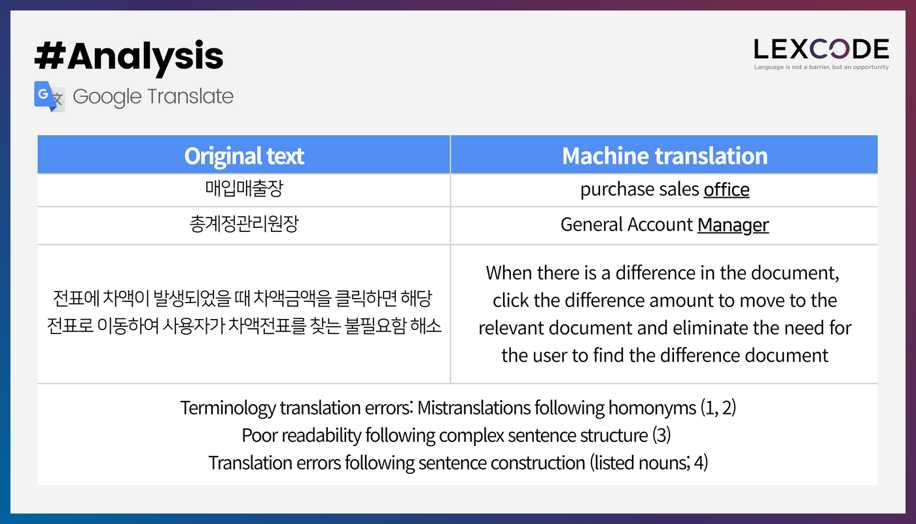

Papago

The overall quality of Papago’s translated sentences rivaled that of Google Translate, but its terminology was far less accurate. Terms, especially technical ones, were rendered inconsistently and inaccurately from sentence to sentence, so they had to be checked and corrected. Homonyms were an issue as well. Although the manual’s sentences were straightforward, many translations read poorly. Many of these could be fixed easily, but some sentences were seriously problematic. Sentences built on strings of consecutive nouns were especially hard to read, and content was sometimes omitted from long sentences, so careful checking was essential.

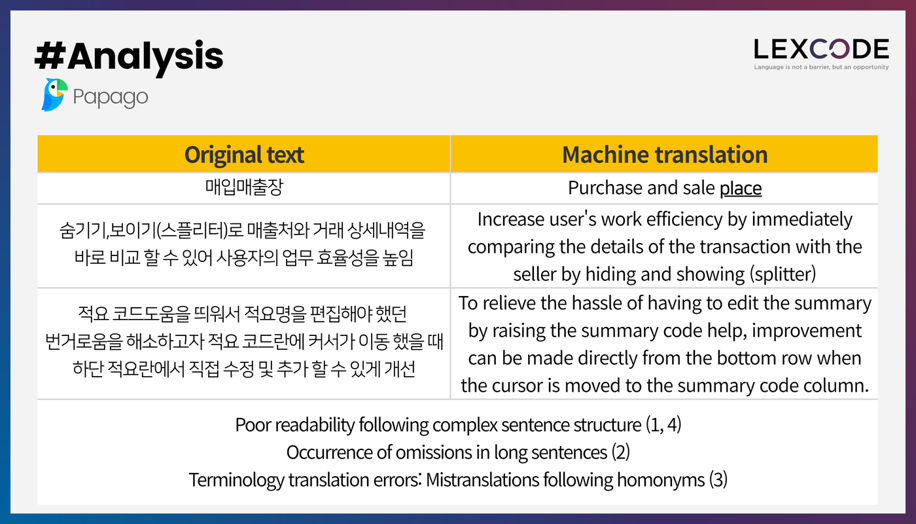

Microsoft Translator

Microsoft Translator’s quality was inferior to that of the other two engines. Most terms, including jargon, were mistranslated, so correcting them would be time-consuming. Most sentences with complex structures had to be retranslated, and even simple sentences were rife with serious errors. Sentences containing many nouns or complex compound words fared worst: their translations were of poor quality or unrelated to the source.

General Review

In this test, Google Translate and Papago both delivered fairly high quality, and their errors were comparable. However, Google Translate was judged the better fit because Hyperlation’s operations rely on a CAT (computer-assisted translation) tool. The Microsoft Translator engine was also tested with a CAT tool, but its output was difficult to use in practice.

The Lexcode Hyperlation Team continues to test AI translation engines across various fields and languages, in the belief that human translation and AI translation are not mutually exclusive but complementary and mutually beneficial.

CEO note: Although I head a translation company, I have never thought of translation by itself as our purpose. It is merely a means of turning language barriers into opportunities, and any means will do as long as it delivers those opportunities faster and more accurately. Check back with us for our next round of translation experiments, this time in the humanities.

For a short read on human and machine translation, I recommend this article: Four reasons artificial intelligence can replace human translation and one reason it can’t

Ham Chul Young, CEO of Lexcode
